r. tyler and i did viz for ciel, dj ladybug, and never not romance for crip rave during the plural prototypes gray area festival.

solo viz for dj ladybug

i did solo viz for dj ladybug with much more prepared material than i typically use for a hydra set (so i would call this a hybrid rather than a pure livecoding set)

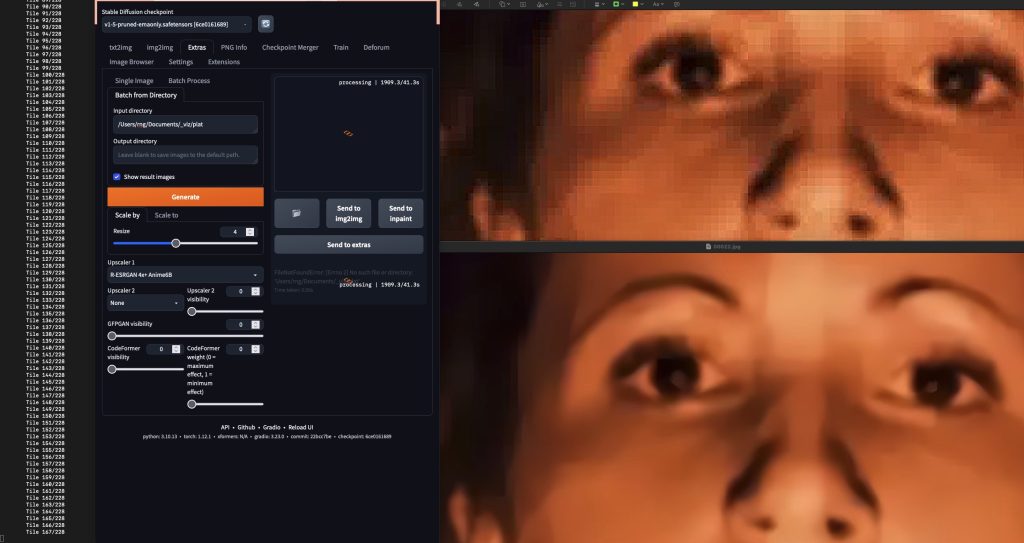

step 1: preprocessing materials. when i asked dj ladybug for inspo she referred me to val de omar’s PLAT, which was a 20 min film comprising a series of still photographs. the only source we had for this was 480p. first i upscaled the photos to 4k (n=110) in stablediffusion. this process took about 12 hours.

i then ran minterpolate over the stills to get a morphing effect so that there weren’t hard cuts.

    ffmpeg -y -fflags +genpts -r 60 -i img%03d.jpg -vf "setpts=100*PTS,minterpolate=fps=60:scd=none" -pix_fmt yuv420p "minterpolatedPLAT.mp4"

i then extended the video duration to 1:15 (the approximate length of the set).
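
a quick sanity check on the timing, as a sketch: assuming ffmpeg reads the 110 stills at 60 fps (the `-r 60` before `-i`) and `setpts=100*PTS` stretches the timestamps by 100, the interpolated clip comes out to roughly three minutes, which is why it still needed extending. the function names are hypothetical, and reading 1:15 as 1 hour 15 minutes is an assumption.

```javascript
// rough duration (seconds) of the clip produced by the ffmpeg command above.
// assumes the stills are read at inputFps and setpts multiplies timestamps by `stretch`.
function interpolatedSeconds(stillCount, inputFps, stretch) {
  return (stillCount / inputFps) * stretch;
}

// hypothetical helper: the setpts factor that would fill a target duration directly.
function stretchForTarget(stillCount, inputFps, targetSeconds) {
  return targetSeconds / (stillCount / inputFps);
}

const clipSeconds = interpolatedSeconds(110, 60, 100);
console.log(clipSeconds.toFixed(1)); // 183.3 seconds of morphing footage

const setSeconds = 75 * 60; // assumption: 1:15 means 1 hour 15 minutes
console.log(Math.round(stretchForTarget(110, 60, setSeconds))); // 2455
```

a larger setpts factor means minterpolate has to synthesize proportionally more frames, so render time would likely grow with it.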

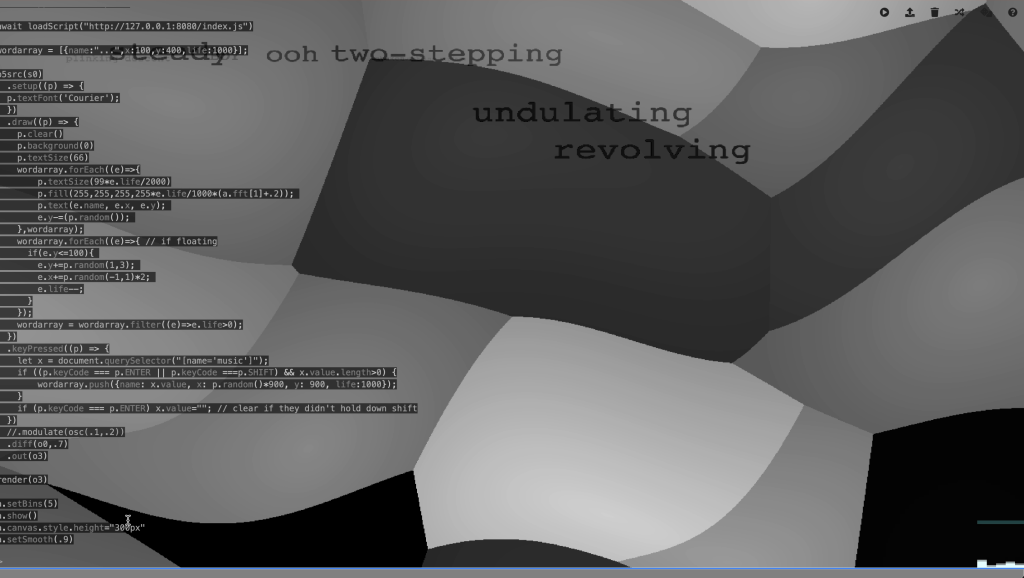

step 2: adding a text layer. gray area provided an access doula who professionally poeticized the music into words for a hearing-impaired audience, so i needed a way for this interpreter to enter text in reaction to the music and have it display on the screen.

example of text overlay onto hydra visuals

this was a simple p5 script in hydra, but it required adding a text input element to the hydra editor page. there’s a compilation step for running hydra locally that i haven’t really checked out yet, so (i think) unless i recompiled, no edits to the source js would appear in the served editor. instead i wrote a script to add the elements post-load (and to set up the midi controller hooks i would need later):

    // add custom elements for js
    // text input the interpreter types into
    input = document.createElement("input");
    input.setAttribute('type', 'text');
    input.setAttribute('name', 'music');
    input.setAttribute('style', 'background-color: gray; width:74%; position:relative; left: 1%; opacity: .8');
    // button placed next to the text input
    input2 = document.createElement("button");
    input2.setAttribute('name', 'codevis');
    input2.setAttribute('style', 'width:23%; left:76%; top: -20px; position: relative; height: 20px; background-color: #9C9C9C; opacity: .8');
    input2.addEventListener('click', function(e) {
      // the button has no id set, so log its name instead
      console.log('Click happened for: ' + e.target.name);
    });
    // attach both elements to the hydra editor
    var parent = document.getElementById("editor-container");
    parent.appendChild(input);
    parent.appendChild(input2);

    // register WebMIDI
    navigator.requestMIDIAccess()
      .then(onMIDISuccess, onMIDIFailure);
    function onMIDISuccess(midiAccess) {
      console.log(midiAccess);
      // route every connected midi input to the same handler
      for (var midiInput of midiAccess.inputs.values()) {
        midiInput.onmidimessage = getMIDIMessage;
      }
    }
    function onMIDIFailure() {
      console.log('Could not access your MIDI devices.');
    }
    // create an array to hold our cc values and init to a normalized value
    var cc = Array(128).fill(0.5)
    getMIDIMessage = function(midiMessage) {
      var arr = midiMessage.data
      var index = arr[1]
      console.log('Midi received on cc#' + index + ' value:' + arr[2]) // comment out to stop monitoring incoming Midi
      var val = (arr[2]+1)/128.0 // normalize CC values (0-127) to roughly 0.0 - 1.0
      cc[index] = val
    }
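
one caveat with the handler above: it treats every incoming message as a control change, so a note-on from the same controller would also write into `cc`. a minimal sketch of filtering on the status byte, assuming standard midi where control change messages carry a status of 0xB0 to 0xBF. `parseControlChange`, `handleMessage` and `ccValues` are hypothetical names for illustration.

```javascript
// returns { channel, controller, value } for control change messages, else null.
// `data` is the byte array from a MIDIMessageEvent (midiMessage.data above).
function parseControlChange(data) {
  if (!data || data.length < 3) return null;
  const status = data[0] & 0xf0;      // high nibble = message type
  if (status !== 0xb0) return null;   // 0xB0 = control change
  return {
    channel: data[0] & 0x0f,          // low nibble = midi channel (0-15)
    controller: data[1],              // cc number (0-127)
    value: (data[2] + 1) / 128.0      // same normalization as the handler above
  };
}

// stand-in for the cc array, so this sketch runs on its own
const ccValues = Array(128).fill(0.5);

function handleMessage(data) {
  const msg = parseControlChange(data);
  if (msg) ccValues[msg.controller] = msg.value;
  return msg !== null;
}

handleMessage([0xb0, 7, 127]);  // cc#7 at full value: stored
handleMessage([0x90, 60, 100]); // note-on: ignored
console.log(ccValues[7], ccValues[60]); // 1 0.5
```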

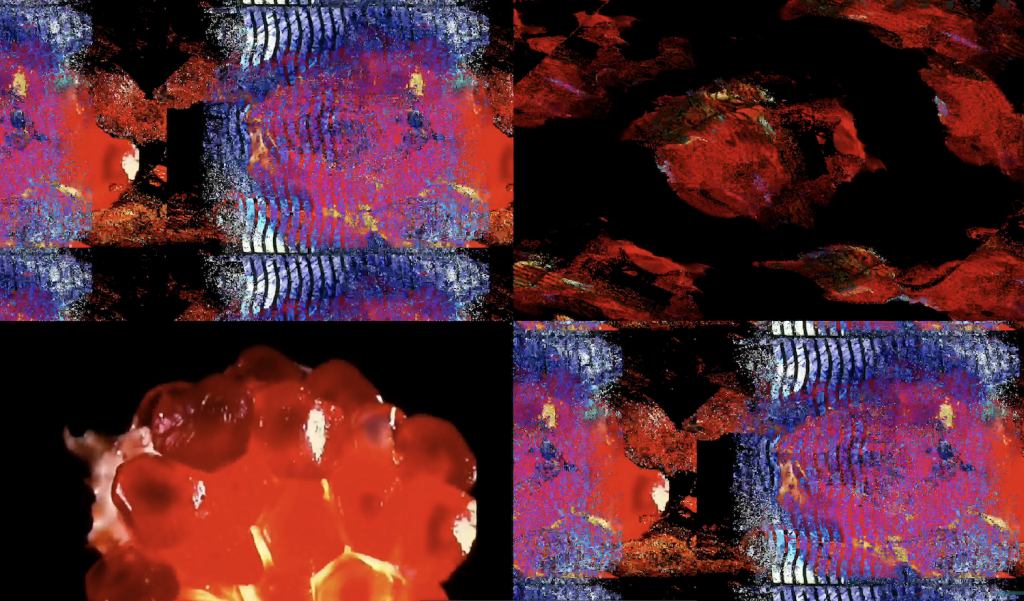

step 3: finally running the set. this is the first set where i extensively used the midi controller (thanks lucy!) and mapped multiple variables to the output. p5 crashed a lot less in hydra since i was using this integration. my concept was to start with a sort of portal/window that expanded and contracted early in the set and slowly took over more of the screen towards the end. the portal aperture was midi-controlled, as was the opacity of the text (though it also had an audio-reactive multiplier). the final presented image was distorted/modulated to the fft (onboard mic input, yuck but i don’t have a better solution rn).
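
a sketch of how the mappings in step 3 might be wired up, not the actual set code. it assumes the `cc` array and `input` element from the script above, hydra's built-in `P5` wrapper and `a.fft` audio bins, and made-up controller numbers (cc#0 for the aperture, cc#1 for text opacity). `ccRange` and `textAlpha` are hypothetical helpers, and the video url is a placeholder.

```javascript
// map a normalized cc value (0..1) onto an arbitrary range
function ccRange(value, min, max) {
  return min + (max - min) * value;
}

// text opacity: midi sets the base level, audio scales it up.
// `boost` controls how much the fft level can add; result is clamped to 0..1.
function textAlpha(ccValue, fftLevel, boost = 0.5) {
  const alpha = ccValue * (1 + boost * fftLevel);
  return Math.min(1, Math.max(0, alpha));
}

// only runs inside the hydra editor, where P5, s0, s1, a and the generators exist
if (typeof P5 !== 'undefined') {
  const p = new P5();
  p.draw = () => {
    p.clear();
    p.fill(255, 255 * textAlpha(cc[1], a.fft[0])); // white text, midi + audio alpha
    p.textSize(48);
    p.text(input.value, 40, p.height / 2);
  };
  p.hide(); // hide the p5 canvas itself; hydra reads it as a source
  s1.init({ src: p.canvas });

  // assumption: the prepared clip is reachable at this url
  s0.initVideo('minterpolatedPLAT.mp4');

  // portal: a circular mask whose radius follows cc#0, distorted by the fft
  src(s0)
    .mask(shape(64, () => ccRange(cc[0], 0.1, 1.2), 0.01))
    .modulate(noise(3), () => a.fft[0] * 0.2)
    .layer(src(s1))
    .out();
}
```

passing functions like `() => ccRange(cc[0], 0.1, 1.2)` rather than plain numbers is what lets hydra re-read the controller on every frame.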

it definitely felt weird to not livecode as much but i didn’t think it was appropriate for the audience and i suppose it worked out because the interpreter was often on my keyboard anyway.

collaborative viz for ciel

we used a capture card to take r. tyler’s hdmi output (gyro-controlled touchdesigner) into hydra and used my laptop as final output. i found this a bit stressful because we didn’t totally rehearse it but it worked well i think. i mostly added recursion and a bit of color tuning (accessible via midi controller) as i didn’t want to add too many elements.

i thought it was unexpected that the capture card input came into hydra via an “initCam()” but other than initial confusion about finding the source, it worked seamlessly <3
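
for anyone hitting the same confusion: hydra's `initCam(index)` appears to pick from the browser's list of video inputs, and a capture card shows up there like any webcam. a sketch of finding the right index by label, assuming the standard `navigator.mediaDevices.enumerateDevices()` api. `videoInputIndex`, the 'capture' label and the cc#2 mapping are hypothetical, and the feedback chain is only a guess at what "recursion" looked like, not the code from the set.

```javascript
// index of the first video input whose label contains `labelPart` (case-insensitive), or -1.
// `devices` is the array resolved by navigator.mediaDevices.enumerateDevices().
function videoInputIndex(devices, labelPart) {
  const cams = devices.filter((d) => d.kind === 'videoinput');
  return cams.findIndex((d) =>
    d.label.toLowerCase().includes(labelPart.toLowerCase())
  );
}

// only runs in a browser with hydra loaded
if (typeof navigator !== 'undefined' && navigator.mediaDevices && typeof s0 !== 'undefined') {
  navigator.mediaDevices.enumerateDevices().then((devices) => {
    const index = videoInputIndex(devices, 'capture'); // assumption: label mentions "capture"
    if (index < 0) return;
    s0.initCam(index);
    // feedback: blend the live input with a slightly scaled copy of the previous frame
    src(s0)
      .blend(src(o0).scale(1.01), () => cc[2]) // cc#2 is a made-up mapping
      .out(o0);
  });
}
```

device labels are usually empty until the page has been granted camera permission, so the lookup may only work after a first `initCam()` call.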